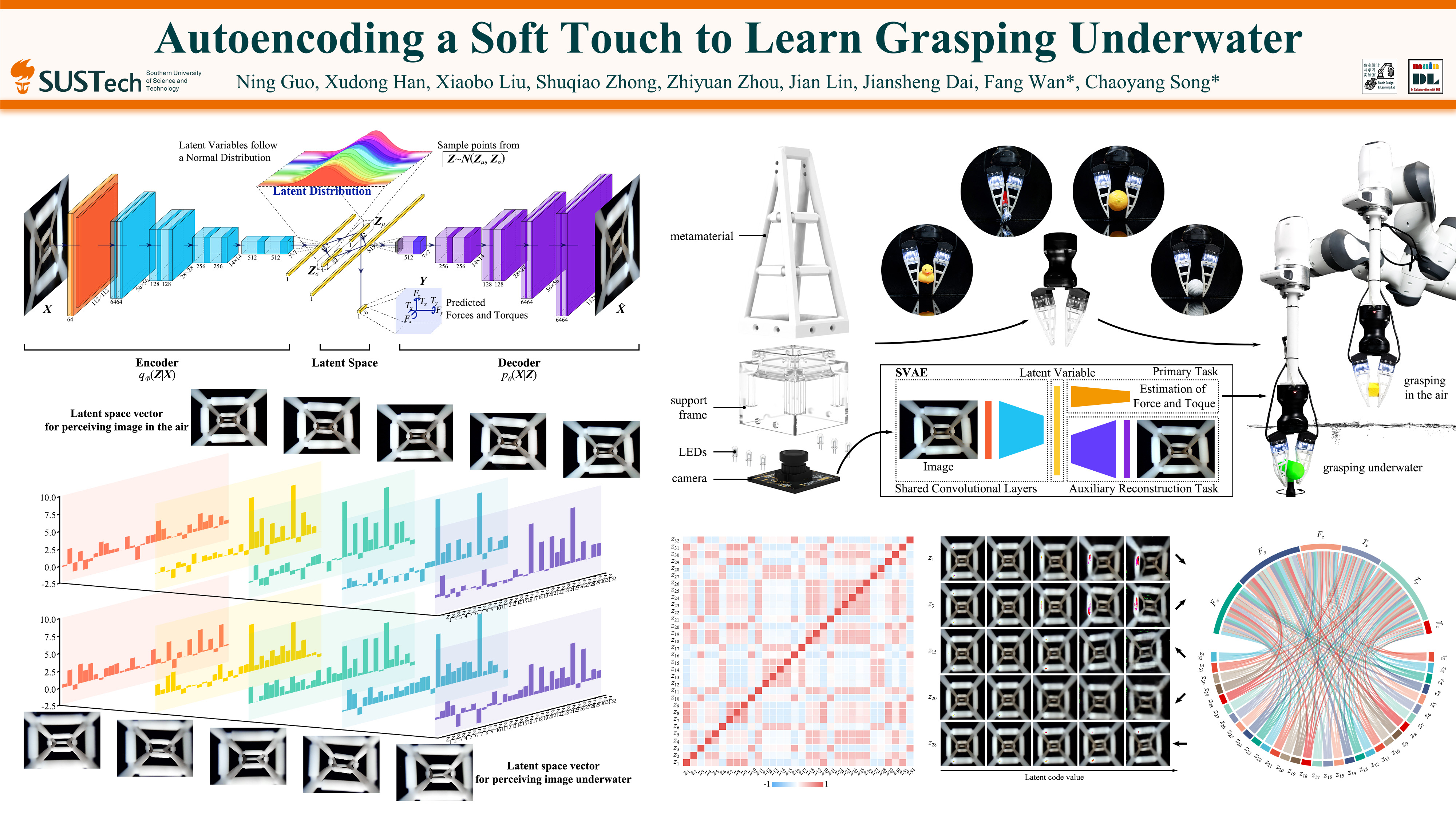

Autoencoding a Soft Touch to Learn Grasping from On-land to Underwater

Abstract: Robots play a critical role as physical agents for human operators exploring the ocean. However, grasping objects reliably remains challenging when the robot is fully submerged in a highly pressurized fluidic environment with little visible light, mainly because the fluid interferes with the interaction physics at the finger and object surfaces. This study proposes a soft visual-tactile learning approach that uses a Supervised Variational Autoencoder (SVAE) to estimate the 6D force and torque (FT) of an adaptive finger made from meta-materials during underwater grasping. We fix a high-frame-rate camera inside the finger network to track its whole-body deformation as it interacts with physical objects. We then train an SVAE model to learn a set of latent variables that yield accurate 6D force and torque estimates, benchmarked against commercial FT sensors. The sensorized soft finger enables reactive underwater grasping with tactile intelligence. Our results extend the use of SVAE in tactile perception to soft, delicate, and reactive grasping underwater, paving the way for future research in learning-based grasping intelligence for underwater robotics.
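The abstract does not specify the network architecture. The following is a minimal NumPy sketch of the general SVAE objective, assuming a standard VAE loss (image reconstruction plus a KL term) with an extra supervised regression term that maps the latent variables to the 6D force/torque label. The linear encoder, decoder, and regression head, the weights `beta` and `gamma`, and every function name here are hypothetical placeholders; a real model would use convolutional layers on the in-finger camera images and a training loop, both omitted here.

```python
import numpy as np


def init_params(img_dim: int, latent_dim: int, ft_dim: int = 6, seed: int = 0) -> dict:
    """Hypothetical linear weights standing in for a real encoder/decoder/regressor."""
    rng = np.random.default_rng(seed)
    s = 0.01
    return {
        "W_mu": rng.normal(0.0, s, (img_dim, latent_dim)),      # encoder -> latent mean
        "W_logvar": rng.normal(0.0, s, (img_dim, latent_dim)),  # encoder -> latent log-variance
        "W_dec": rng.normal(0.0, s, (latent_dim, img_dim)),     # decoder -> reconstructed image
        "W_ft": rng.normal(0.0, s, (latent_dim, ft_dim)),       # supervised head -> 6D FT
    }


def encode(params: dict, x: np.ndarray):
    """Map flattened deformation images to latent mean and log-variance."""
    return x @ params["W_mu"], x @ params["W_logvar"]


def svae_loss(params, x, y_ft, rng, beta=1.0, gamma=1.0):
    """Reconstruction + beta * KL + gamma * supervised FT regression (assumed form)."""
    mu, logvar = encode(params, x)
    # Reparameterization trick: z = mu + sigma * eps, eps ~ N(0, I)
    z = mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)
    x_hat = z @ params["W_dec"]
    ft_hat = z @ params["W_ft"]
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=1))
    # Closed-form KL divergence between N(mu, sigma^2) and N(0, I)
    kl = np.mean(-0.5 * np.sum(1.0 + logvar - mu**2 - np.exp(logvar), axis=1))
    sup = np.mean(np.sum((y_ft - ft_hat) ** 2, axis=1))
    total = recon + beta * kl + gamma * sup
    return total, {"recon": recon, "kl": kl, "sup": sup}


def predict_ft(params: dict, x: np.ndarray) -> np.ndarray:
    """At inference, regress 6D force/torque from the latent mean (no sampling)."""
    mu, _ = encode(params, x)
    return mu @ params["W_ft"]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    x = rng.random((8, 64))          # 8 flattened 8x8 "camera" frames (synthetic)
    y = rng.normal(size=(8, 6))      # synthetic Fx, Fy, Fz, Tx, Ty, Tz labels
    params = init_params(64, 16)
    total, parts = svae_loss(params, x, y, rng)
    print(total, parts)
    print(predict_ft(params, x).shape)
```

The supervised term is what distinguishes this from a plain VAE: it pushes the latent space to encode the information needed for force/torque regression rather than image appearance alone. Predicting from the latent mean at inference is a common choice that keeps real-time estimates deterministic.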

Amphibian Grasping with Visual-Tactile Soft Finger.


Real-time Force/Torque Prediction.
