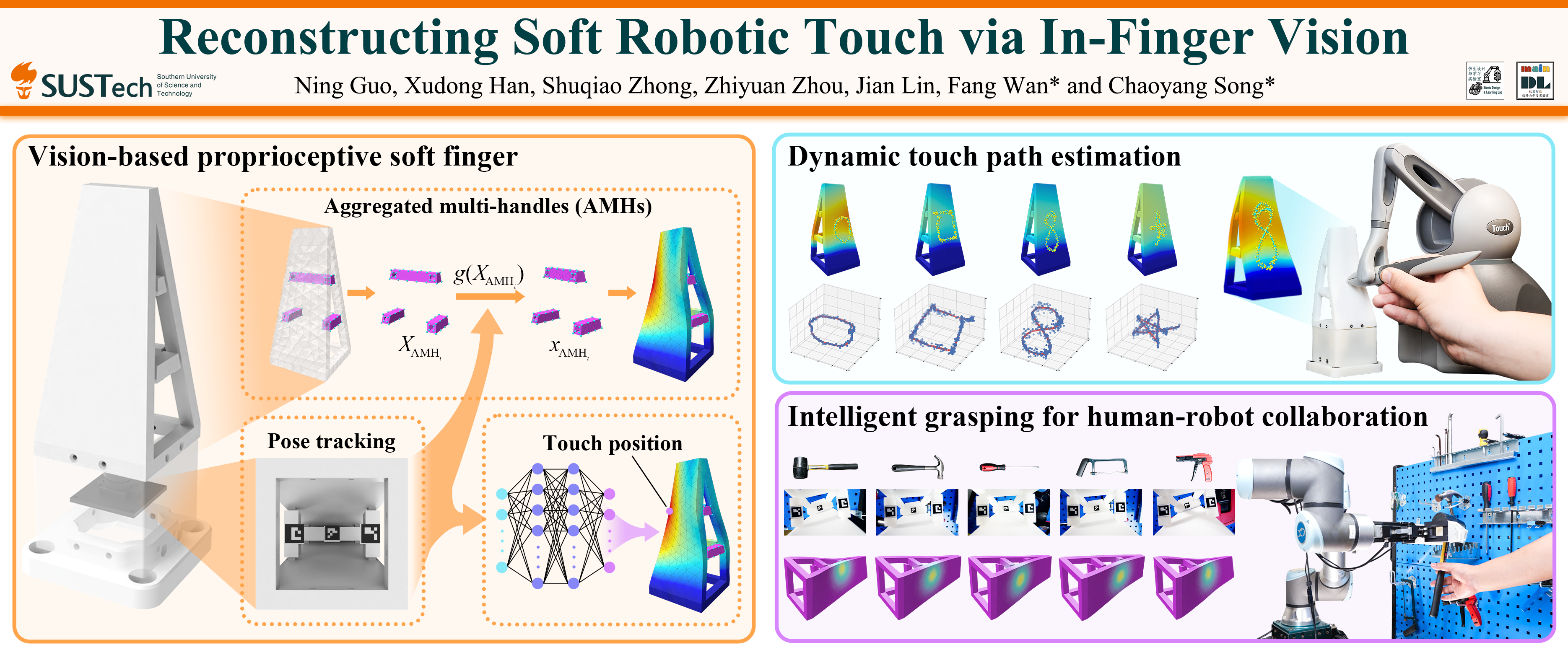

Reconstructing Soft Robotic Touch via In-Finger Vision

Abstract: Incorporating authentic tactile interactions into virtual environments is a notable challenge in the emerging development of soft robotic metamaterials. This study introduces a vision-based approach to learning proprioceptive interactions by simultaneously reconstructing the shape and touch of a Soft Robotic Metamaterial (SRM) during physical interactions. The SRM is sized to a human finger and offers enhanced adaptability in 3D interactions. It also contains a see-through viewing field that a miniature camera mounted underneath can capture, providing a rich set of image features for touch digitization. Using constrained geometric optimization, we model the proprioceptive process with Aggregated Multi-Handles (AMHs). This approach enables real-time, precise, and realistic estimation of the finger's mesh deformation within a virtual environment. We also propose a data-driven learning model to estimate touch positions, achieving $R^2$ scores of 0.9681, 0.9415, and 0.9541 along the $x$, $y$, and $z$ axes, respectively. Furthermore, we demonstrate the robust performance of the proposed methods in touch-based human-cybernetic interfaces and human-robot collaborative grasping. This study opens the door to future applications in touch-based digital twin interactions through vision-based soft proprioception.
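Touch-position accuracy is reported above as one $R^2$ score per axis. The sketch below shows how such per-axis scores can be computed, using the standard definition $R^2 = 1 - SS_{res}/SS_{tot}$. It assumes that ground-truth and predicted touch positions are available as paired lists of $(x, y, z)$ tuples. The function names `r2_score` and `per_axis_r2` are hypothetical, and this is not the authors' evaluation code.

```python
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences with at least 2 samples")
    mean = sum(y_true) / len(y_true)
    # Residual sum of squares: error of the predictions.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: spread of the ground truth around its mean.
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("y_true is constant; R^2 is undefined")
    return 1.0 - ss_res / ss_tot


def per_axis_r2(true_xyz: Sequence[Point], pred_xyz: Sequence[Point]) -> Dict[str, float]:
    """Score each coordinate axis of 3D touch positions independently."""
    scores = {}
    for i, axis in enumerate("xyz"):
        t = [p[i] for p in true_xyz]
        q = [p[i] for p in pred_xyz]
        scores[axis] = r2_score(t, q)
    return scores


if __name__ == "__main__":
    # Synthetic positions for illustration only, not data from this study.
    truth = [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0), (2.0, 4.0, 6.0), (3.0, 5.0, 7.0)]
    pred = [(0.1, 0.2, 0.0), (0.9, 1.8, 3.1), (2.1, 4.1, 5.8), (3.0, 4.9, 7.2)]
    print(per_axis_r2(truth, pred))
```

A score of 1.0 means perfect prediction on that axis. A score of 0.0 means the model does no better than always predicting the axis mean. Scoring each axis separately shows where the estimator is weakest. For comparable results with scikit-learn, `sklearn.metrics.r2_score(y_true, y_pred, multioutput="raw_values")` returns one score per output column.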

Dynamic Touch Path Sensing

Re-Grasping with Tactile Sensing